Comparing Vision Features of Gemini and GPT

Gemini and GPT are two prominent models in computer vision and artificial intelligence. The paper at https://arxiv.org/abs/2312.15011 compares them; this article summarizes that comparison, each model's strengths and weaknesses, and the implications for the broader field.

Table of Contents

- Performance Comparison
- Strengths and Weaknesses
- Novel Task: Image Editing
- Implications for Computer Vision and AI

Performance Comparison

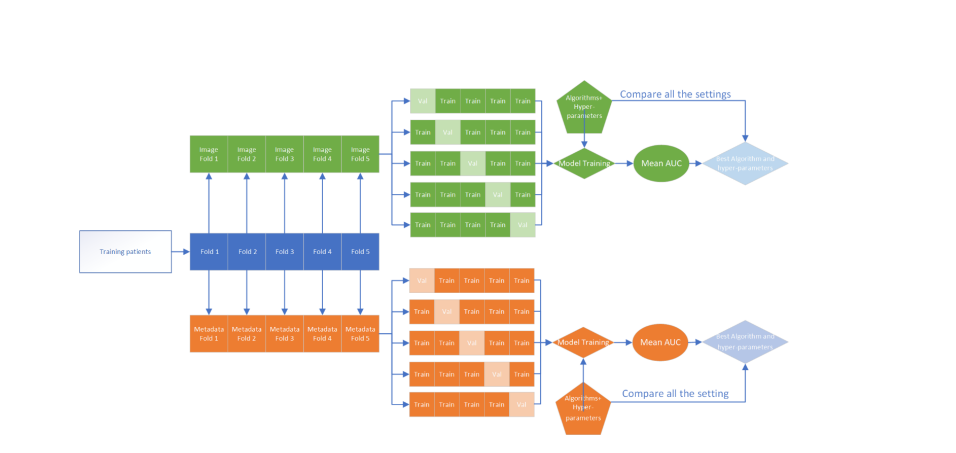

Gemini and GPT have been evaluated on a range of vision tasks, including image generation, classification, segmentation, and captioning. This section covers the results of those evaluations, both quantitative and qualitative.

Strengths and Weaknesses

The two models differ in both strengths and weaknesses. Gemini, with its transformer architecture and dual encoder-decoder, can leverage both global and local information, handle multimodal inputs and outputs, and generate diverse and coherent images. GPT, by contrast, relies on a single autoregressive decoder, which brings its own set of advantages and disadvantages.

Novel Task: Image Editing

The paper proposes a novel image-editing task in which the model must modify an existing image according to a natural-language instruction. The task poses a new challenge for both models and opens a new avenue for research.
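To make the shape of this task concrete, here is a minimal Python sketch of its input and output: an image plus an instruction goes in, and an edited image comes out. Everything in it is an assumption made for illustration and is not taken from the paper: the names `EditExample`, `toy_editor`, and `run_task` are hypothetical, the "image" is a toy grid of grayscale values, and the rule-based editor is only a stand-in for a real model such as Gemini or GPT.

```python
from dataclasses import dataclass
from typing import Callable, List

# Toy grayscale image: rows of pixel intensities in 0..255 (an assumption).
Image = List[List[int]]


@dataclass
class EditExample:
    """One task instance: a source image and a natural-language instruction."""
    image: Image
    instruction: str


def toy_editor(example: EditExample) -> Image:
    """Hypothetical stand-in for a model; it recognizes only two instructions."""
    text = example.instruction.lower()
    if "flip" in text and "horizontal" in text:
        # Mirror each row left to right.
        return [list(reversed(row)) for row in example.image]
    if "invert" in text:
        # Replace each intensity with its complement.
        return [[255 - px for px in row] for row in example.image]
    # Unrecognized instruction: return an unchanged copy.
    return [list(row) for row in example.image]


def run_task(editor: Callable[[EditExample], Image],
             examples: List[EditExample]) -> List[Image]:
    """Apply any editor with the task's signature to a list of examples."""
    return [editor(ex) for ex in examples]
```

Because `run_task` accepts any callable with the same signature, two different models could in principle be wrapped as editors and run on identical examples for a side-by-side comparison.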

Implications for Computer Vision and AI

The comparison has implications for the broader field of computer vision and artificial intelligence. This section discusses those implications, along with potential directions for future research.